The Bottleneck in Behavioral Research

Designing a rigorous psychology experiment is notoriously time-consuming. Researchers must navigate complex decisions: selecting appropriate measures, determining sample sizes, controlling confounds, and creating valid stimuli. A single study can take months to design before data collection begins [1].

The replication crisis has only raised the stakes. Underpowered studies, questionable research practices, and poorly specified hypotheses have undermined confidence in psychological science [2]. Rigorous methodology is no longer optional; it is essential.

Psychology Experiment Designer

The Psychology Experiment Designer transforms a research question into a complete, publication-ready study protocol. From a single input, the researcher's question or hypothesis, the system generates the design, measures, validity safeguards, power analysis, and study materials needed for rigorous experimentation.

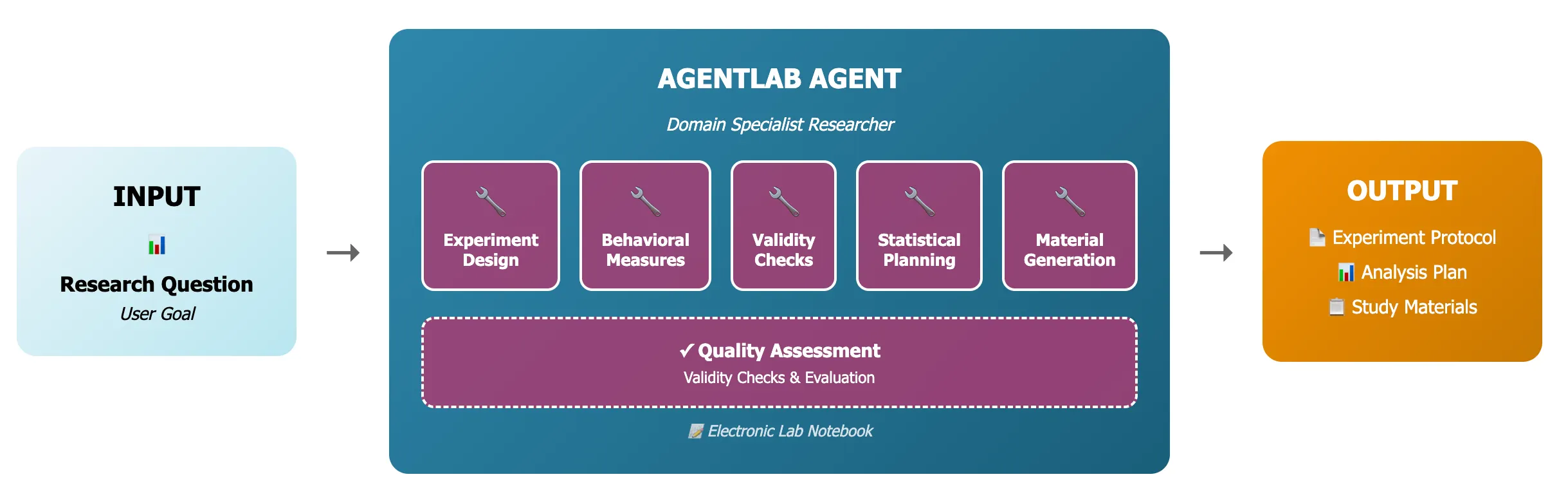

Figure 1: The agent pipeline transforms a research question through experiment design, behavioral measures, validity checks, statistical planning, and material generation to produce publication-ready outputs.

Core Capabilities

Experiment Design: Structures studies with appropriate controls, randomization schemes, and counterbalancing, choosing a between-subjects, within-subjects, or mixed design to suit the research question [3].
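For within-subjects designs, a common counterbalancing scheme is the balanced Latin square (Williams design): each condition appears in each serial position equally often, and each condition immediately precedes every other condition equally often across a full set of orders. The sketch below is an illustration of that technique, not the tool's own code; the function name `balanced_latin_square_order`, the zero-based participant numbering, and the condition labels are assumptions.

```python
def balanced_latin_square_order(conditions, participant):
    """Return one participant's presentation order under a balanced
    Latin square (Williams design).

    With an even number of conditions, each block of len(conditions)
    participants is balanced for first-order carryover. With an odd number,
    a block of 2 * len(conditions) is needed, so odd-numbered participants
    receive the reversed sequence.
    """
    n = len(conditions)
    order = []
    low, high = 0, 0  # counters walking up from the start and down from the end
    for i in range(n):
        # Base sequence: 0, 1, n-1, 2, n-2, 3, ...
        if i < 2 or i % 2 == 1:
            value = low
            low += 1
        else:
            value = n - high - 1
            high += 1
        # Shift the base sequence by the participant number (mod n).
        order.append(conditions[(value + participant) % n])
    if n % 2 == 1 and participant % 2 == 1:
        order.reverse()
    return order


if __name__ == "__main__":
    # Hypothetical labels for a four-condition within-subjects study.
    conds = ["A", "B", "C", "D"]
    for p in range(4):
        print(p, balanced_latin_square_order(conds, p))
```

For four conditions this cycles through the orders A B D C, B C A D, C D B A, and D A C B, so recruitment in multiples of four keeps the design fully balanced.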

Behavioral Measures: Recommends validated scales and instruments from established repositories, matching constructs to psychometrically sound measures with known reliability coefficients.
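Internal-consistency reliability is among the most commonly reported coefficients for such scales, usually as Cronbach's alpha: α = k/(k − 1) × (1 − Σ item variances / variance of total scores), where k is the number of items. A minimal sketch, assuming responses arrive as one list of item scores per participant (the function name and toy data are illustrative, not part of the tool):

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of participants, each a list of item scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    Sample variances (n - 1 denominator) are used throughout; the ratio is
    identical with population variances. Raises ZeroDivisionError if every
    participant has the same total score.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha requires at least two items")
    items = list(zip(*responses))               # transpose: one tuple per item
    item_var_sum = sum(variance(item) for item in items)
    totals = [sum(row) for row in responses]    # each participant's scale score
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


if __name__ == "__main__":
    # Toy data: two perfectly consistent items give alpha = 1.0.
    print(cronbach_alpha([[1, 1], [2, 2], [3, 3]]))
```

In practice a recommended measure's published alpha would be compared against the value observed in the new sample.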

Validity Checks: Identifies threats to internal and external validity, suggesting attention checks, manipulation checks, and exclusion criteria aligned with best practices [4].
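Exclusion criteria are most defensible when they are written down before data collection and then applied mechanically. The sketch below assumes a hypothetical per-participant record and illustrative rules: a required pass rate on instructed-response items, a minimum completion time, and optional exclusion on a failed manipulation check. The field names, the 300-second cutoff, and the defaults are placeholders a researcher would set and pre-register, not values the tool prescribes.

```python
from dataclasses import dataclass


@dataclass
class ParticipantRecord:
    # Hypothetical fields; real survey-platform exports will differ.
    participant_id: str
    attention_passed: int        # instructed-response items answered as instructed
    attention_total: int         # instructed-response items shown
    completion_seconds: float
    manipulation_check_ok: bool  # e.g., correctly reported which imagery was shown


def exclusion_reasons(rec, min_seconds=300.0, min_attention_rate=1.0,
                      exclude_failed_manipulation=True):
    """Return the pre-specified rules this participant violates (empty if none)."""
    reasons = []
    if rec.attention_total > 0 and rec.attention_passed / rec.attention_total < min_attention_rate:
        reasons.append("attention check")
    if rec.completion_seconds < min_seconds:
        reasons.append("too fast")
    if exclude_failed_manipulation and not rec.manipulation_check_ok:
        reasons.append("manipulation check")
    return reasons


def apply_exclusions(records, **rules):
    """Split records into retained participants and a per-participant exclusion log."""
    kept, log = [], {}
    for rec in records:
        reasons = exclusion_reasons(rec, **rules)
        if reasons:
            log[rec.participant_id] = reasons
        else:
            kept.append(rec)
    return kept, log
```

Keeping the exclusion log alongside the retained data makes it straightforward to report how many participants each rule removed.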

Statistical Planning: Conducts a priori power analyses to determine required sample sizes, basing target effect sizes on meta-analytic estimates and matching each calculation to the planned analysis.
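For the simplest case, two independent groups compared with a two-sided t-test, the required sample size can be approximated without specialist software. The sketch below uses the normal-approximation formula with a small-sample correction attributed to Guenther (1981): n per group ≈ 2(z_a + z_b)²/d² + z_a²/4, where z_a is the standard-normal quantile at 1 − α/2 and z_b the quantile at the target power. It is an approximation chosen for illustration, not the tool's own routine; repeated-measures effects and interactions need a dedicated calculation (for example, G*Power or simulation).

```python
import math
from statistics import NormalDist


def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate per-group sample size for a two-sided, two-sample t-test
    with equal group sizes and standardized effect size d (Cohen's d).

    Normal approximation plus a small-sample correction term (z_a**2 / 4)
    that brings the result close to the exact noncentral-t answer.
    """
    if d <= 0:
        raise ValueError("effect size d must be positive")
    std_normal = NormalDist()                  # mean 0, sd 1
    z_a = std_normal.inv_cdf(1 - alpha / 2)    # two-sided critical value
    z_b = std_normal.inv_cdf(power)            # quantile for the target power
    n = 2 * (z_a + z_b) ** 2 / d ** 2 + z_a ** 2 / 4
    return math.ceil(n)                        # round up to whole participants


if __name__ == "__main__":
    per_group = n_per_group(0.5)
    print(per_group, 2 * per_group)  # 64 128
```

With d = 0.5, α = .05, and 80% power this returns 64 per group, 128 in total, consistent with the sample size in the worked example; for a large effect (d = 0.8) it returns 26 per group.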

Material Generation: Produces deployable survey instruments, consent forms, and debriefing materials ready for IRB submission and online platforms.

From Hypothesis to Protocol

Input: "Does exposure to nature imagery reduce stress reactivity compared to urban imagery?"

Output:

- 2 × 2 mixed factorial design: imagery type (nature vs. urban, between-subjects) × time (pre vs. post, within-subjects)

- Stress measures: STAI-State (state anxiety, before and after exposure), PSS-10 (past-month perceived stress, as a baseline characteristic), and a cortisol proxy

- Power analysis: N = 128 (64 per group) to detect a medium between-group effect (d = 0.5) with 80% power at α = .05, two-tailed

- Attention checks and manipulation verification items

- Complete Qualtrics-ready survey with randomized image presentation
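Randomized presentation is usually configured inside the survey platform, but the underlying logic is simple to sketch. The example below assumes permuted-block randomization (blocks of two, so the nature and urban groups stay balanced as enrollment proceeds) and a per-participant shuffle of image order seeded from a study seed plus the participant ID, so any participant's sequence can be regenerated later. The function names, seed value, ID format, and image file names are hypothetical.

```python
import random


def assign_imagery(participant_ids, conditions=("nature", "urban"), seed=2024):
    """Permuted-block randomization: each consecutive block of
    len(conditions) participants receives every condition exactly once."""
    rng = random.Random(seed)
    assignment, block = {}, []
    for pid in participant_ids:
        if not block:                  # start a new, freshly shuffled block
            block = list(conditions)
            rng.shuffle(block)
        assignment[pid] = block.pop()
    return assignment


def image_order(participant_id, images, seed=2024):
    """Reproducible shuffle of one participant's image sequence."""
    rng = random.Random(f"{seed}:{participant_id}")  # string seeds are deterministic
    order = list(images)
    rng.shuffle(order)
    return order


if __name__ == "__main__":
    ids = [f"P{i:03d}" for i in range(128)]
    groups = assign_imagery(ids)
    print(sum(g == "nature" for g in groups.values()))  # 64
    print(image_order("P000", ["forest.jpg", "lake.jpg", "meadow.jpg"]))
```

Seeding per participant rather than sharing one global generator means a single participant's order can be reconstructed without replaying everyone enrolled before them.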

What traditionally requires weeks of literature review and methodological planning becomes available in minutes.

Quality by Design

The system embeds methodological rigor at every step:

- Pre-registration ready: Outputs align with OSF pre-registration templates

- Transparent reporting: Generates methods sections following the APA Journal Article Reporting Standards (JARS) [5]

- Reproducibility focus: Documents all design decisions for replication
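One lightweight way to document design decisions is a machine-readable record that can be attached to a pre-registration or shared with replicators. The schema below is a hypothetical illustration (not an OSF template and not the tool's output format), filled in with the worked example's values; the α = .05 entry is an assumption.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class Decision:
    choice: str
    rationale: str


@dataclass
class ProtocolRecord:
    # Hypothetical schema for illustration only.
    research_question: str
    design: str
    effect_size_d: float
    alpha: float
    power: float
    n_total: int
    decisions: list[Decision] = field(default_factory=list)

    def to_json(self) -> str:
        # asdict() recurses into nested dataclasses, including those in lists.
        return json.dumps(asdict(self), indent=2)


if __name__ == "__main__":
    record = ProtocolRecord(
        research_question="Does exposure to nature imagery reduce stress "
                          "reactivity compared to urban imagery?",
        design="2 x 2 mixed (imagery type x time)",
        effect_size_d=0.5,
        alpha=0.05,      # assumed; not stated in the example
        power=0.80,
        n_total=128,
    )
    record.decisions.append(Decision(
        choice="Exclude participants who fail any attention check",
        rationale="Pre-specified to limit satisficing",  # illustrative rationale
    ))
    print(record.to_json())
```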

Applications

Academic Researchers: Accelerate study design while maintaining rigor

Graduate Students: Learn experimental design principles through guided protocol generation

Industry R&D: Rapid user research and behavioral testing with scientific validity

Replication Projects: Quickly generate protocols for direct and conceptual replications

The Future of Behavioral Science

As psychology confronts its methodological challenges, tools that embed best practices into the research workflow become invaluable. The Psychology Experiment Designer doesn't replace scientific judgment; it amplifies it, making rigorous methodology accessible to every researcher.

References

[1] Munafò MR, et al. A manifesto for reproducible science. Nature Human Behaviour. 2017;1:0021.

[2] Open Science Collaboration. Estimating the reproducibility of psychological science. Science. 2015;349(6251):aac4716.

[3] Shadish WR, Cook TD, Campbell DT. Experimental and Quasi-Experimental Designs for Generalized Causal Inference. Houghton Mifflin; 2002.

[4] Oppenheimer DM, Meyvis T, Davidenko N. Instructional manipulation checks: Detecting satisficing to increase statistical power. Journal of Experimental Social Psychology. 2009;45(4):867-872.

[5] Appelbaum M, et al. Journal article reporting standards for quantitative research in psychology. American Psychologist. 2018;73(1):3-25.